Generating Surrogate Keys Across Warehouses

Why primary keys are important

We all know one of the most fundamental rules in data is that every table should have a primary key. Primary keys are critical for many reasons:

- They ensure that you don’t have duplicate rows in your table

- They help establish relationships to other tables

- They allow you to quickly identify the grain of the table (ex: the

customerstable with a PK ofcustomer_idhas one row per customer) - You can test them in dbt, to ensure that your data is complete and unique

If you are lucky, your data will come to you with a unique primary key already in place. Maybe it is an id generated by your internal product systems, or maybe you are pulling data in from a third party source that generates the id for you.

Sometimes, however, you find yourself in a situation where you don’t have a tidy primary key.

For example, maybe you have joined your users to the set of features that they use in your product, such that your data should be expected to be unique on the user_id + product_id basis.

The question is - in this situation, how are you supposed to set a primary key? Meet the surrogate key.

What’s a surrogate key?

A surrogate key is a primary key that, instead of existing in your underlying dataset, is derived in the analytics layer itself.

Learning when to use surrogate keys and bring them into your project is a critical skill for any analytics professional.

Knowing when to use a surrogate key is actually quite easy: you should have a surrogate key on any table that doesn’t already have a unique primary key.

Knowing how to create a surrogate key can prove to be much more challenging. The reason for this is that it isn’t always easy to know the best way to implement surrogate keys. Should you just be blocking off time on your calendar every day to individually name each of your rows?

Turns out this is a relatively well-solved problem. To create a surrogate key, you traditionally follow these two steps.

- Concatenate together all of the fields required to make a unique row (for example,

user_idandproduct_id) - Apply a function to create a cryptographic hash (usually using the md5 function) on top of these to generate a unique id per combination of unique values

While the process of creating a surrogate key is relatively well understood, you will be shocked (SHOCKED I SAY) to hear that SQL syntax can have subtle differences across dialects and databases.

Surrogate keys in BigQuery, Databricks, Redshift and Snowflake

BigQuery, Redshift and Snowflake’s concat functions returns null if any of the referenced columns for that row returns a null, so to create a proper surrogate key you’d need to wrap each column in a coalesce before hashing with an md5 function:

md5 ( concat ( coalesce(column1, '_this_used_to_be_null_'), coalesce(column2, '_this_used_to_be_null_') ) )

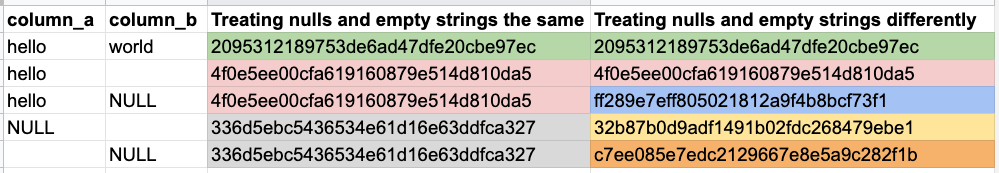

You'll notice that instead of coalescing to a blank string, there is a long replacement string. It doesn't matter what this string is, as long as it's consistent across your project and doesn't appear in any of your data. When you coalesce to a blank string, you can't differentiate between null and '' which sometimes has semantic meaning. Compare the results of these surrogate keys:

Databricks’ concat function docs don’t specifically reference returning null for the concat if one column is null, but I believe that’s what’s meant by The result type matches the argument types.

You could also separate your columns with pipes (||) rather than using the concat function, but I generally stay away from pipes (one comma > two pipes).

Surrogate keys in Postgres

Postgres’ concat function ignores nulls, which saves you from having to wrap each column in a coalesce function to default nulls to a different value (but this has the same drawback shown in the table above, where you can get the same key from different inputs).

If you used || instead of concat, one null column would cause the entire statement would return a null, breaking your concatenation.

So in plain old PostgreSQL, you’d use:

md5 ( concat (column1, column2) )

The null value problem in surrogate keys

The primary annoyance when creating surrogate keys comes when you try and concatenate a row that has a null value for one or more columns. If any value is null, then often the entire concatenated string is returned as null - no good!

with

example_ids as (

select

123 as user_id,

123 as product_id

union all

select

123 as user_id,

null as product_id

union all

select

null as user_id,

123 as product_id

)

select

*,

concat(user_id, product_id) as _surrogate_key

from example_ids

output:

| USER_ID | PRODUCT_ID | _SURROGATE_KEY |

|---|---|---|

| 123 | 123 | 123123 |

| 123 | null | null |

null | 123 | null |

You can get around this by wrapping each of your columns in a coalesce function to default nulls to an alternate value, which is pretty tedious. You can also run into problems if the fields are different datatypes (string vs numeric), so sometimes you need to cast as well.

...

select

*,

concat(

coalesce(cast(user_id as string), '_this_used_to_be_null_'),

coalesce(cast(product_id as string), '_this_used_to_be_null_')

) as _surrogate_key

from example_ids

output:

| USER_ID | PRODUCT_ID | _SURROGATE_KEY |

|---|---|---|

| 123 | 123 | 123123 |

| 123 | null | 123_this_used_to_be_null_ |

null | 123 | _this_used_to_be_null_123 |

Much better! But let's add another row to our dataset:

with

example_ids as (

select

123 as user_id,

123 as product_id

union all

select

123 as user_id,

null as product_id

union all

select

null as user_id,

123 as product_id

union all

select 1231 as user_id,

23 as product_id

)

select

*,

concat(

coalesce(cast(user_id as string), '_this_used_to_be_null_'),

coalesce(cast(product_id as string), '_this_used_to_be_null_')

) as _surrogate_key

from example_ids

output:

| USER_ID | PRODUCT_ID | _SURROGATE_KEY |

|---|---|---|

| 123 | 123 | 123123 |

| 123 | null | 123_this_used_to_be_null_ |

null | 123 | _this_used_to_be_null_123 |

| 1231 | 23 | 123123 |

At first glance, this looks like it works, but in reality there are two identical keys: 123123.

To remedy this, you need to add a separator between fields you wish to concatenate.

...

select

*,

concat(

coalesce(cast(user_id as string), ''),

'|',

coalesce(cast(product_id as string), '')

) as _surrogate_key

from example_ids

output:

| USER_ID | PRODUCT_ID | _SURROGATE_KEY |

|---|---|---|

| 123 | 123 | 123|123 |

| 123 | null | 123|_this_used_to_be_null_ |

null | 123 | _this_used_to_be_null_|123 |

| 1231 | 23 | 1231|23 |

Let’s take a look at how generating surrogate keys specifically looks in practice across data warehouses, and how you can use one simple dbt macro (dbt_utils.generate_surrogate_key) to abstract away the null value problem.

A surrogate_key macro to the rescue

Thanks to a handy function called generate_surrogate_key in the dbt_utils package, you can fire yourself from the business of wrapping your columns in coalesce every time you want to generate a surrogate key.

Forming your surrogate keys with this macro has the benefit of elegant + DRY null handling.

Rather than wrapping your columns in a coalesce function when concatenating them, the macro loops through your columns and coalesces on your behalf, so that you can avoid repeating yourself.

When you call {{ dbt_utils.generate_surrogate_key(['field_a', 'field_b'[,...]]) }}, behind the scenes dbt compiles SQL on your behalf, looping through each field and generating the correct number of coalesce statements with type casting:

coalesce(cast(" ~ field ~ " as " ~ dbt.type_string() ~ "), '_dbt_utils_surrogate_key_null_')

and with conditional logic, adding separator between fields:

{%- if not loop.last %}

{%- set _ = fields.append("'-'") -%}

{%- endif -%}

What does this mean in practice?

Well, you simply don’t have to think about your surrogate keys all that much. On any data warehouse, nulls or no nulls, it just works. Because honestly, who wants to spend more time than they need to thinking about surrogate keys?

Comments