Cache common queries

The dbt Semantic Layer allows you to cache common queries in order to speed up performance and reduce compute on expensive queries.

There are two different types of caching:

- Result caching leverages your data platform's built-in caching layer.

- Declarative caching allows you to pre-warm the cache using saved queries configuration.

While you can use caching to speed up your queries and reduce compute time, knowing the difference between the two depends on your use case:

- Result caching happens automatically by leveraging your data platform's cache.

- Declarative caching allows you to 'declare' the queries you specifically want to cache. With declarative caching, you need to anticipate which queries you want to cache.

- Declarative caching also allows you to dynamically filter your dashboards without losing the performance benefits of caching. This works because filters on dimensions (that are already in a saved query config) will use the cache.

Prerequisites

- dbt Cloud Team or Enterprise plan.

- dbt Cloud environments that are "Versionless".

- A successful job run and production environment.

- For declarative caching, you need to have exports defined in your saved queries YAML configuration file.

Result caching

Result caching leverages your data platform’s built-in caching layer and features. MetricFlow generates the same SQL for multiple query requests, this means it can take advantage of your data platform’s cache. Double-check your data platform's specifications.

Here's how caching works, using Snowflake as an example, and should be similar across other data platforms:

- Run from cold cache — When you run a semantic layer query from your BI tool that hasn't been executed in the past 24 hours, the query scans the entire dataset and doesn't use the cache.

- Run from warm cache — If you rerun the same query after 1 hour, the SQL generated and executed on Snowflake remains the same. On Snowflake, the result cache is set per user for 24 hours, which allows the repeated query to use the cache and return results faster.

Different data platforms might have different caching layers and cache invalidation rules. Here's a list of resources on how caching works on some common data platforms:

Declarative caching

Declarative caching enables you to pre-warm the cache using saved queries by setting the cache config to true in your saved_queries settings. This is useful for optimizing performance for key dashboards or common ad-hoc query requests.

Declarative caching also allows you to dynamically filter your dashboards without losing the performance benefits of caching. This works because filters on dimensions (that are already in a saved query config) will use the cache.

For example, if you filter a metric by geographical region on a dashboard, the query will hit the cache, ensuring faster results. This also removes the need to create separate saved queries with static filters.

For configuration details, refer to Declarative caching setup.

How declarative caching works:

- Make sure your saved queries YAML configuration file has exports defined.

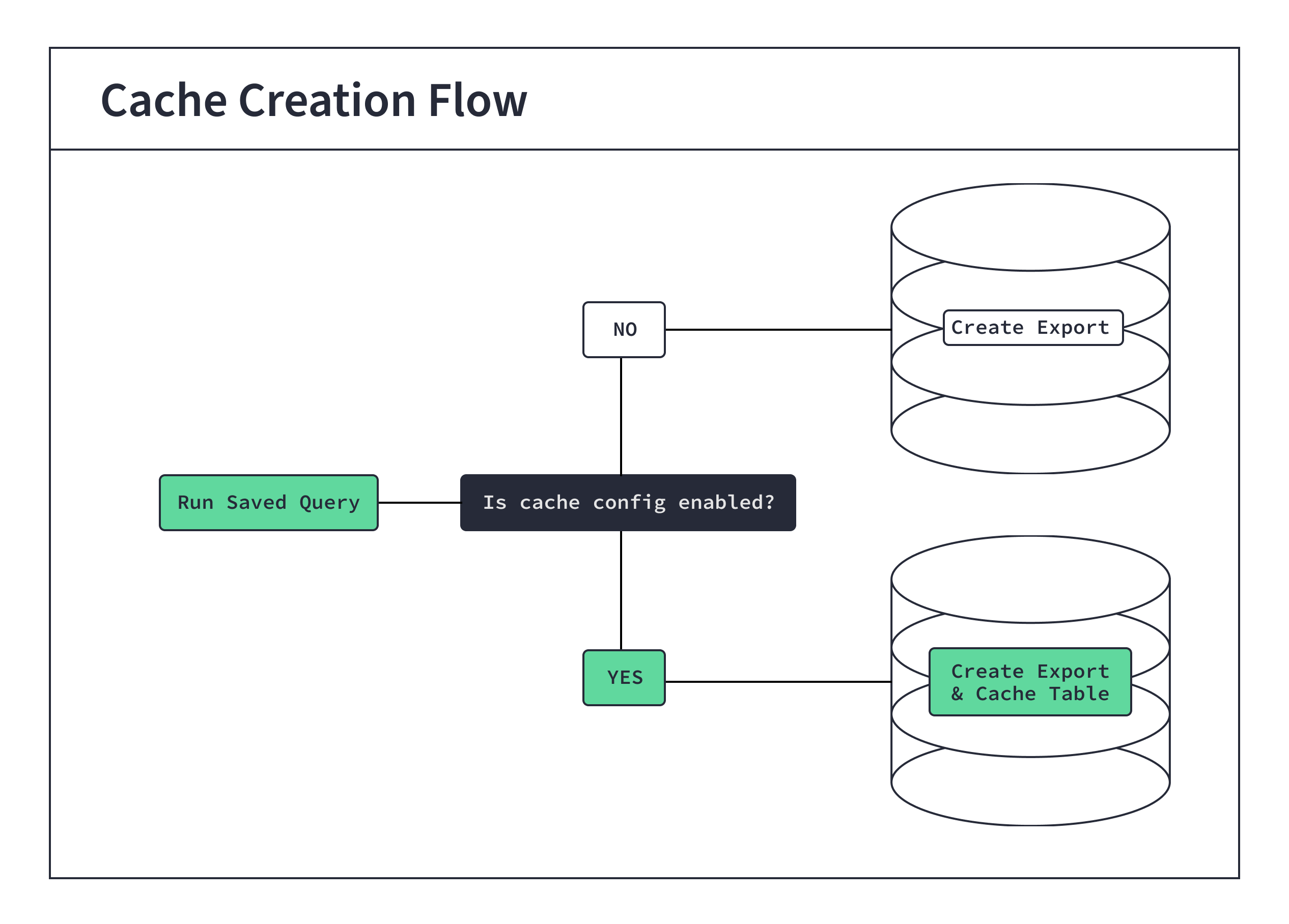

- Running a saved query triggers the dbt Semantic Layer to:

- Build a cached table from a saved query, with exports defined, into your data platform.

- Make sure any query requests that match the saved query's inputs use the cache, returning data more quickly.

- Automatically invalidates the cache when it detects new and fresh data in any upstream models related to the metrics in your cached table.

- Refreshes (or rebuilds) the cache the next time you run the saved query.

📹 Check out this video demo to see how declarative caching works!

This video demonstrates the concept of declarative caching, how to run it using the dbt Cloud scheduler, and how fast your dashboards load as a result.

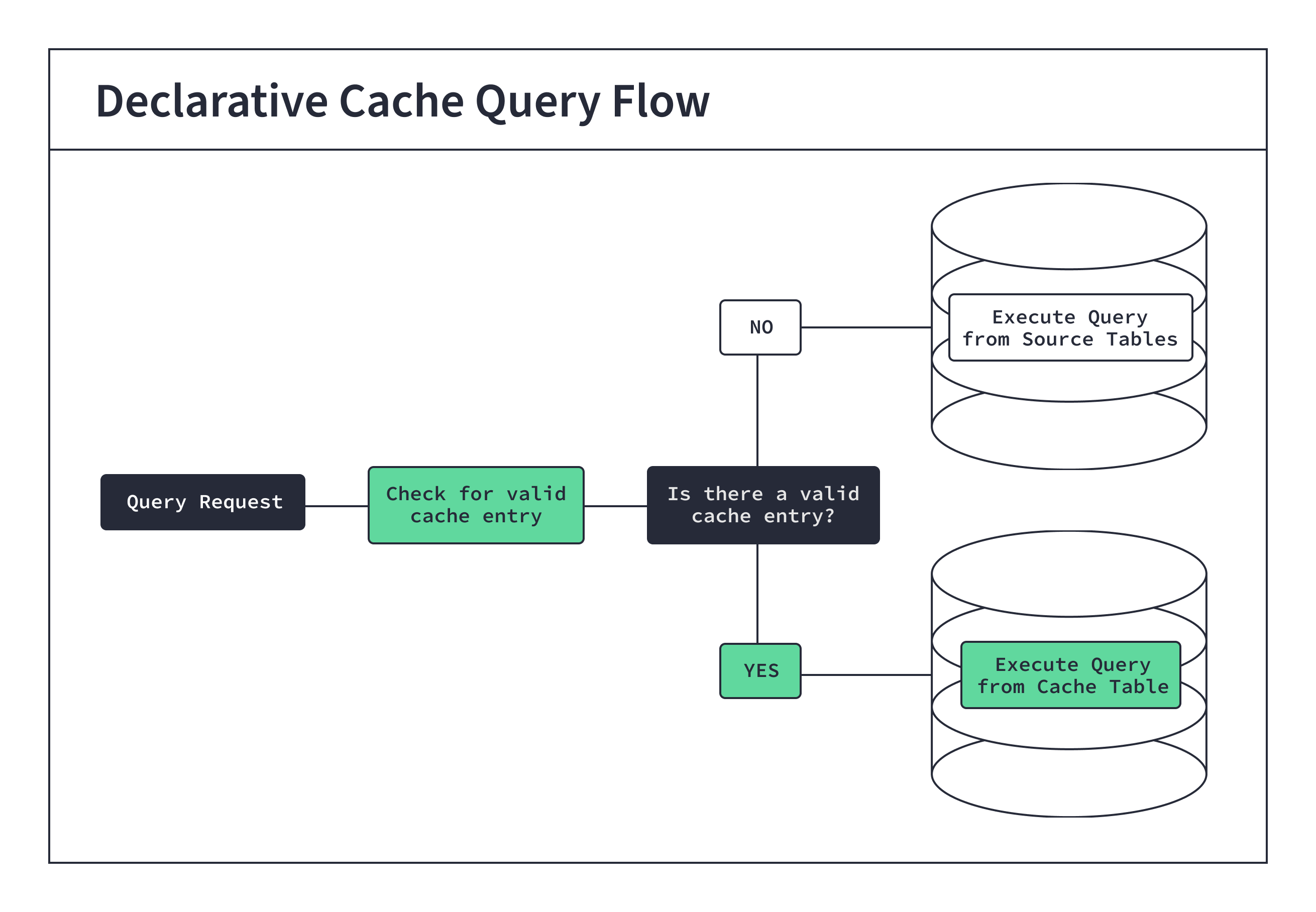

Refer to the following diagram, which illustrates what happens when the dbt Semantic Layer receives a query request:

Declarative caching setup

To populate the cache, you need to configure an export in your saved query YAML file configuration and set the cache config to true. You can't cache a saved query without an export defined.

saved_queries:

- name: my_saved_query

... # Rest of the saved queries configuration.

config:

cache:

enabled: true # Set to true to enable, defaults to false.

exports:

- name: order_data_key_metrics

config:

export_as: table

To enable saved queries at the project level, you can set the saved-queries configuration in the dbt_project.yml file. This saves you time in configuring saved queries in each file:

saved-queries:

my_saved_query:

config:

+cache:

enabled: true

Run your declarative cache

After setting up declarative caching in your YAML configuration, you can now run exports with the dbt Cloud job scheduler to build a cached table from a saved query into your data platform.

- Use exports to set up a job to run a saved query dbt Cloud.

- The dbt Semantic Layer builds a cache table in your data platform in a dedicated

dbt_sl_cacheschema. - The cache schema and tables are created using your deployment credentials. You need to grant read access to this schema for your Semantic Layer user.

- The cache refreshes (or rebuilds) on the same schedule as the saved query job.

After a successful job run, you can go back to your dashboard to experience the speed and benefits of declarative caching.

Cache management

dbt Cloud uses the metadata from your dbt model runs to intelligently manage cache invalidation. When you start a dbt job, it keeps track of the last model runtime and checks the freshness of the metrics upstream of your cache.

If an upstream model has data in it that was created after the cache was created, dbt Cloud invalidates the cache. This means queries won't use outdated cases and will instead query directly from the source data. Stale, outdated cache tables are periodically dropped and dbt Cloud will write a new cache the next time your saved query runs.

You can manually invalidate the cache through the dbt Semantic Layer APIs using the InvalidateCacheResult field.